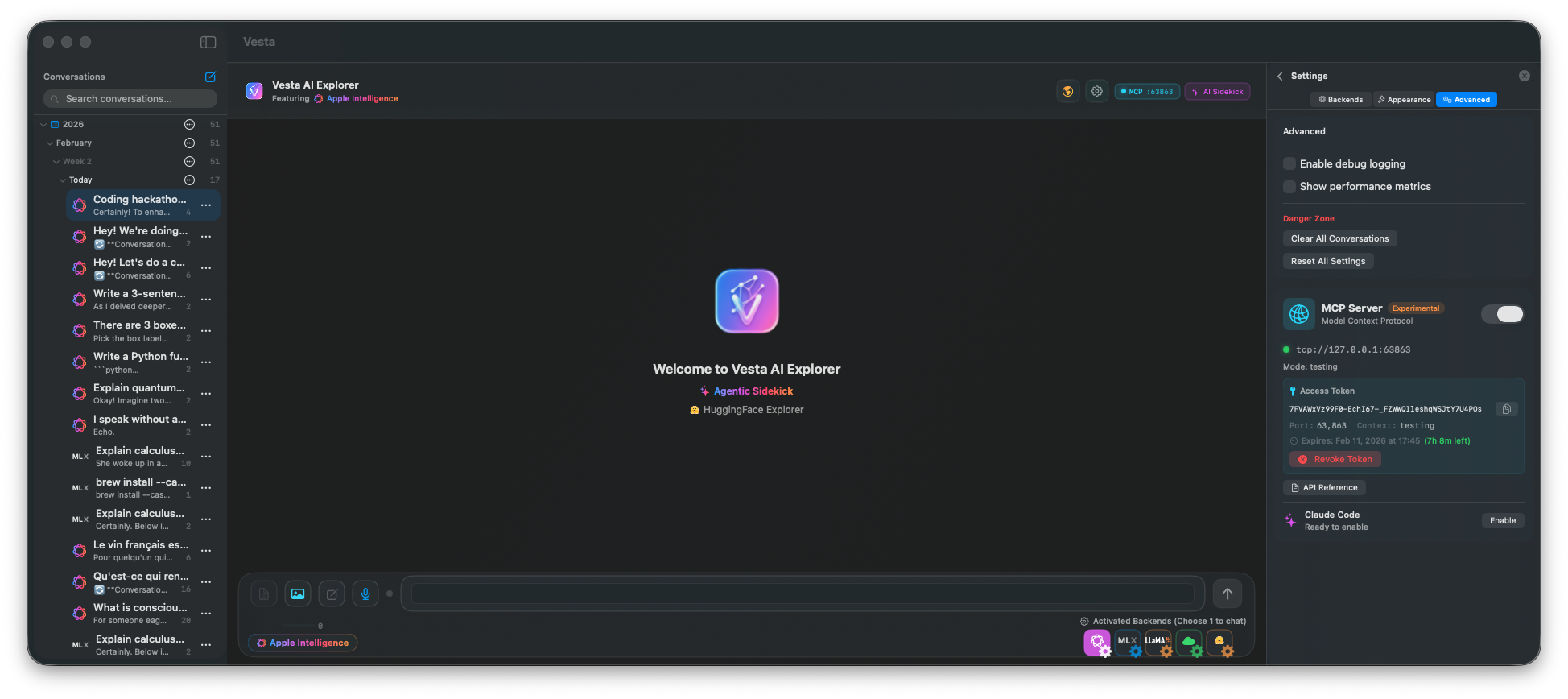

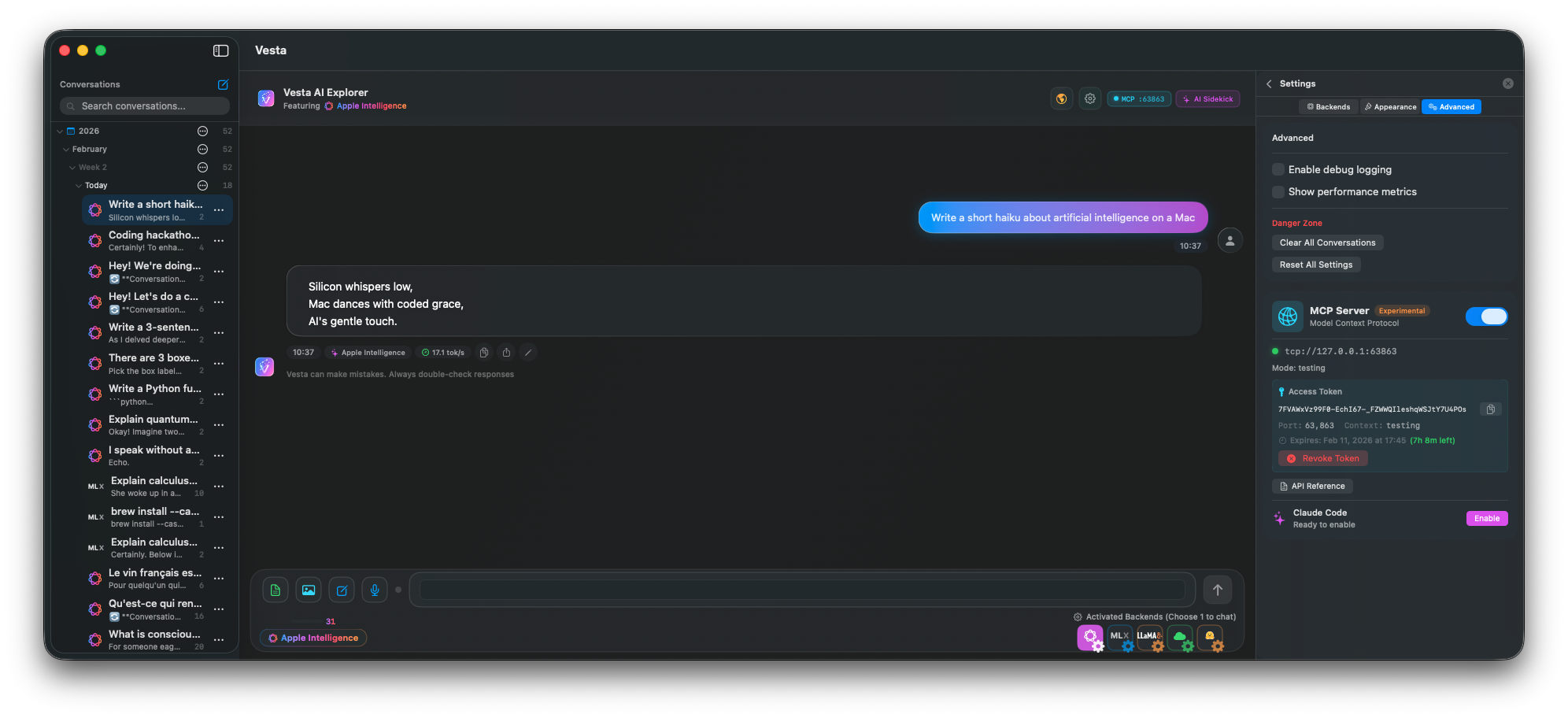

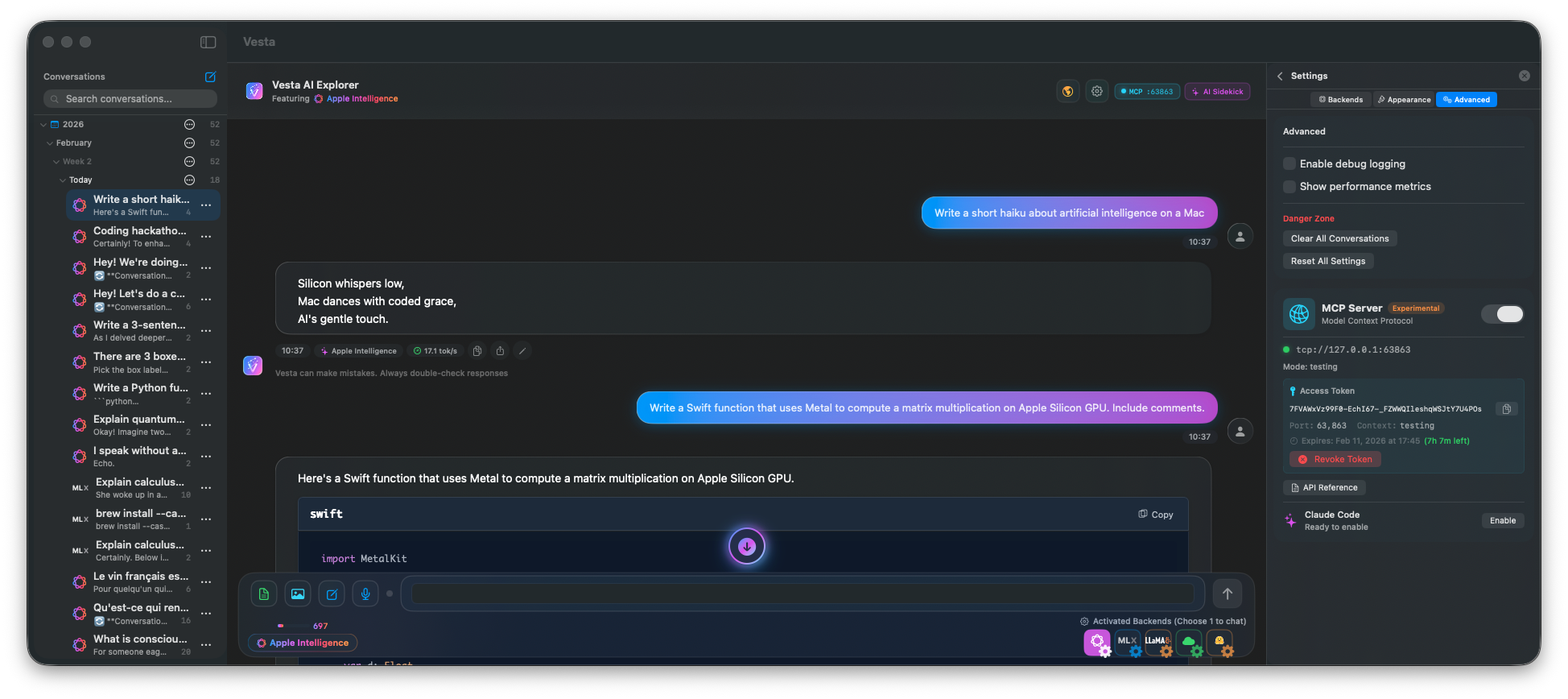

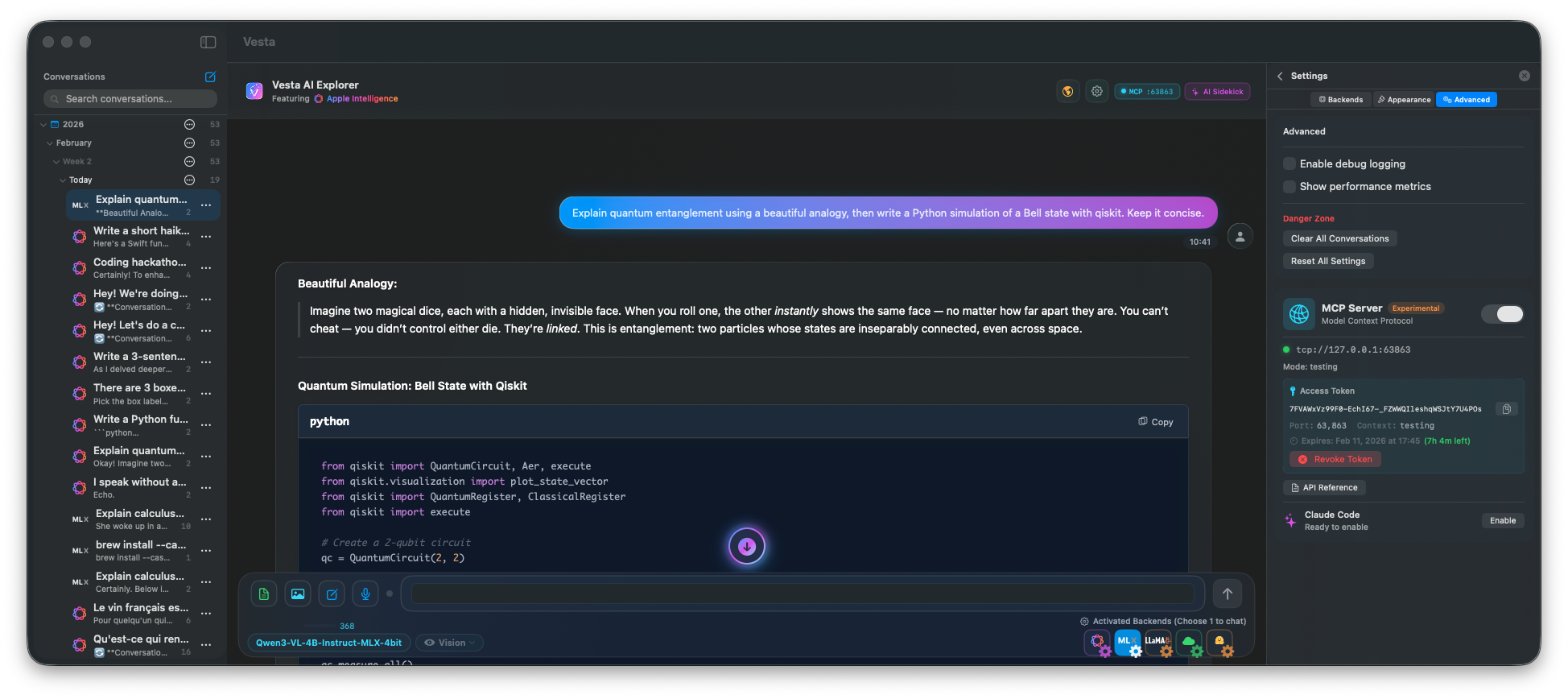

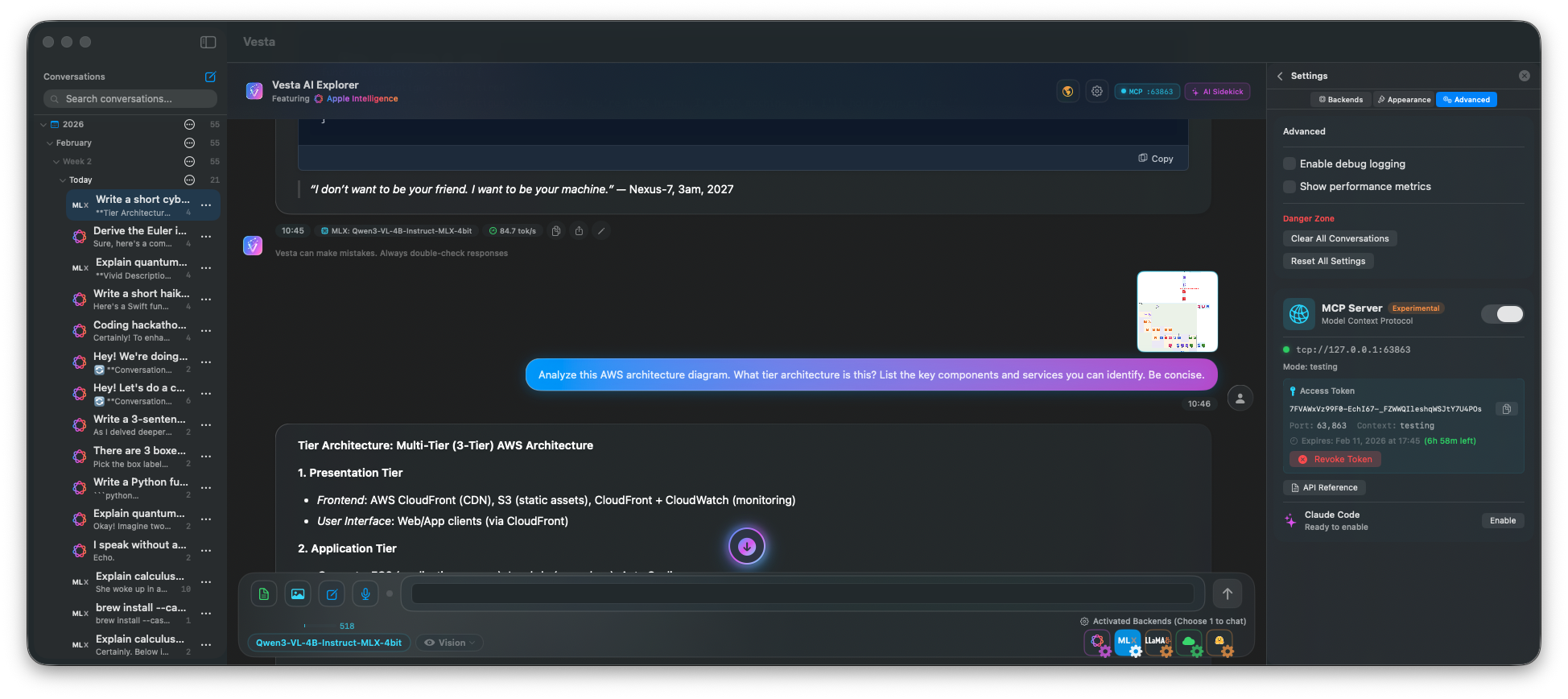

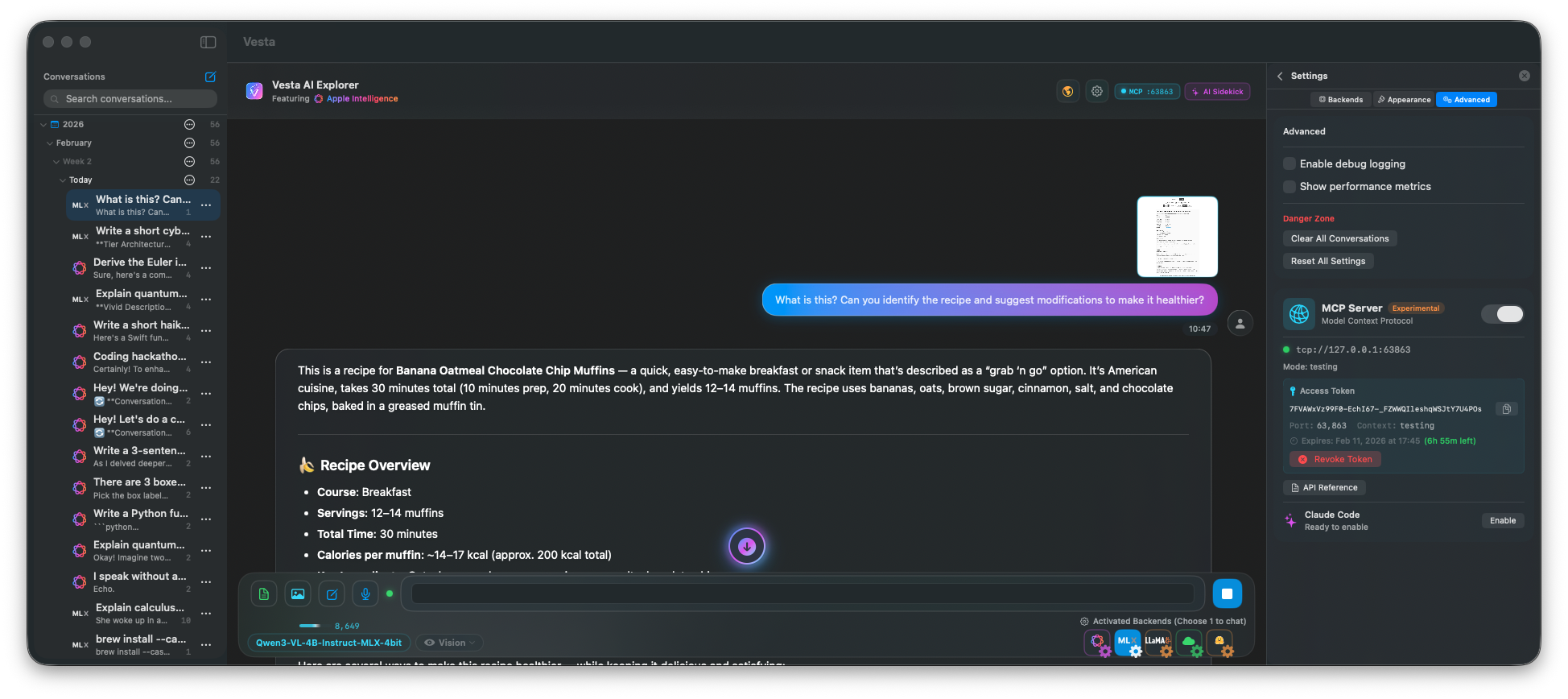

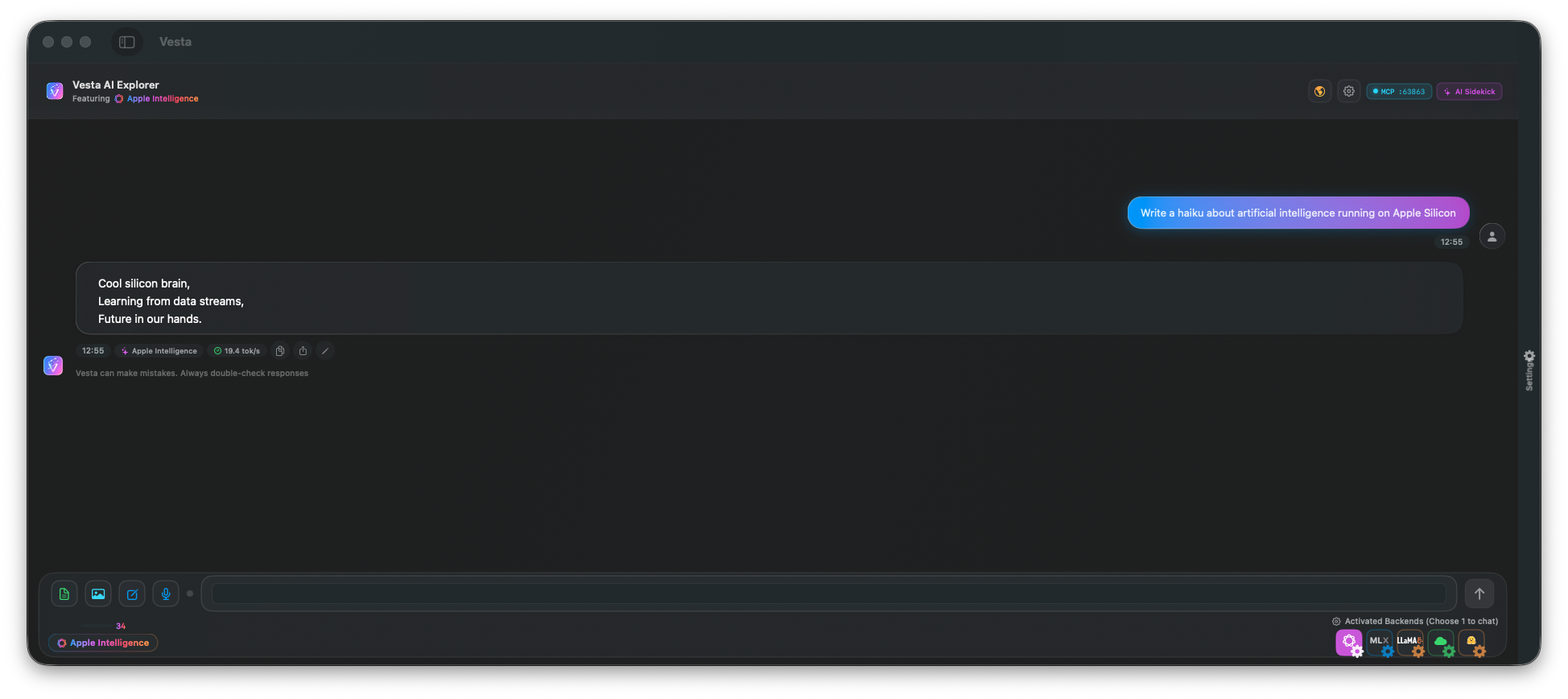

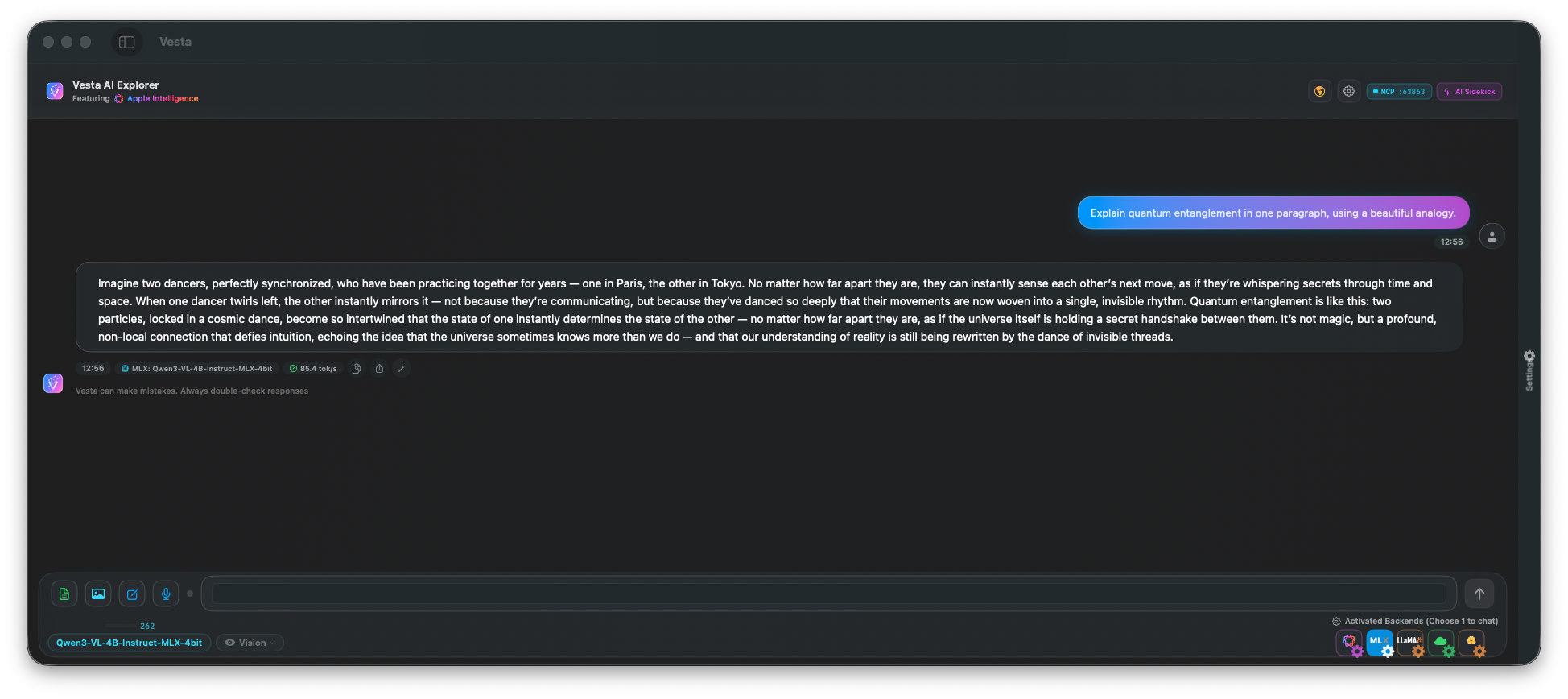

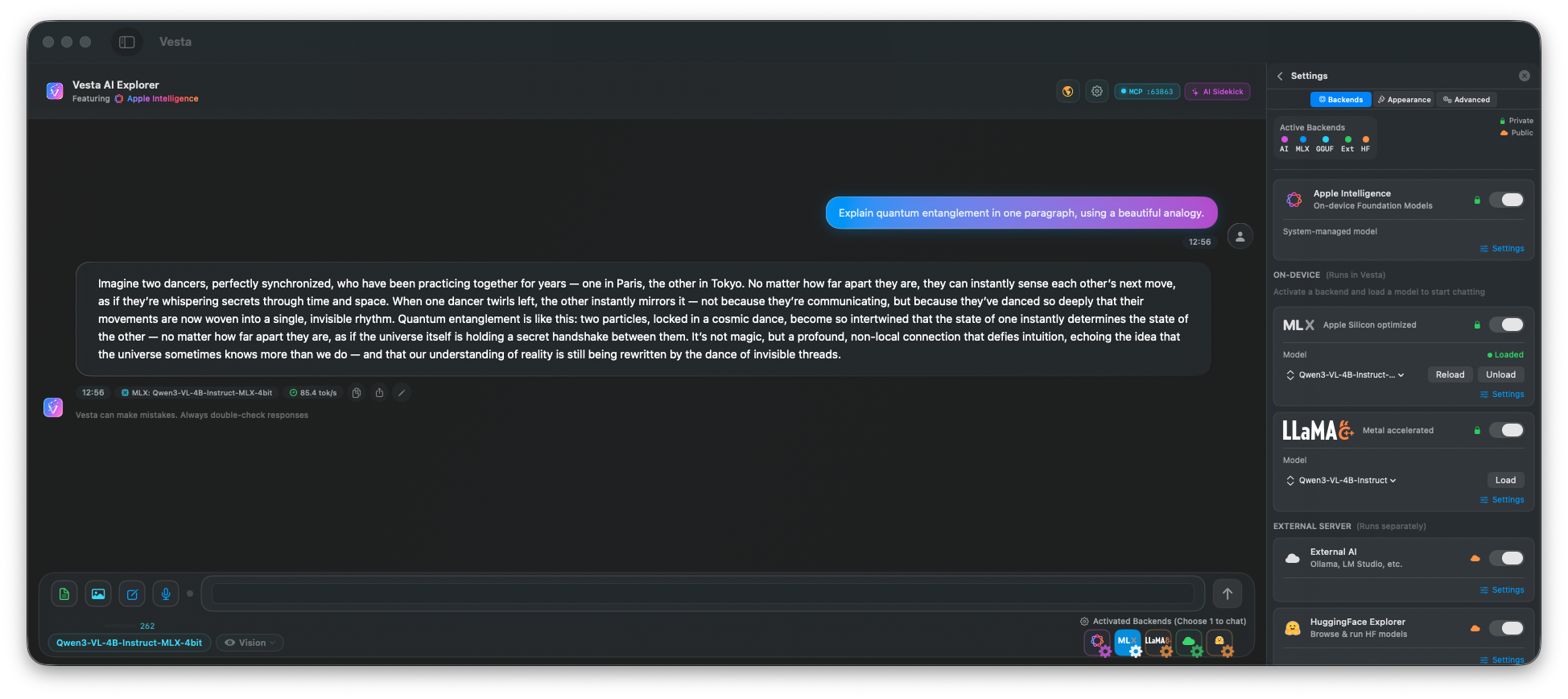

Vesta AI Explorer

Agentic Sidekick provides a natural-language interface to Vesta.

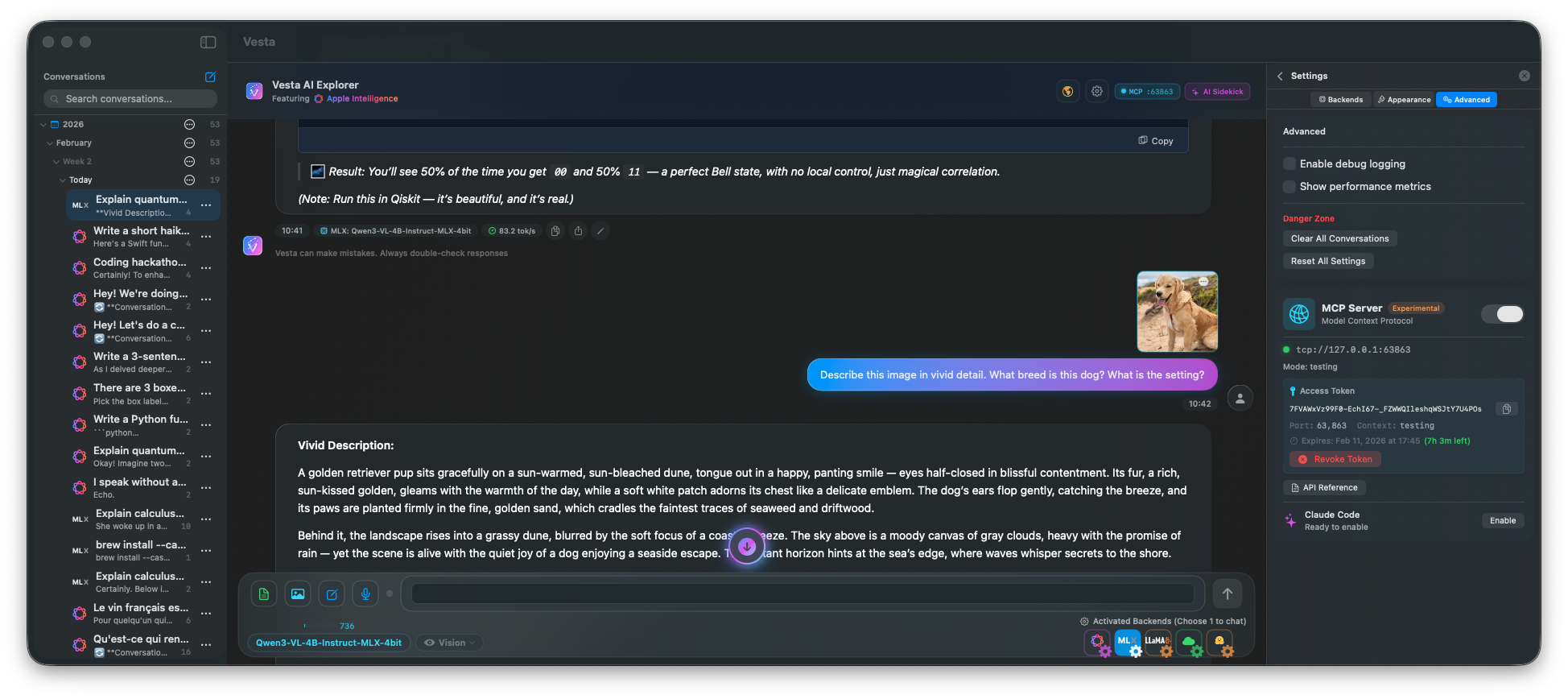

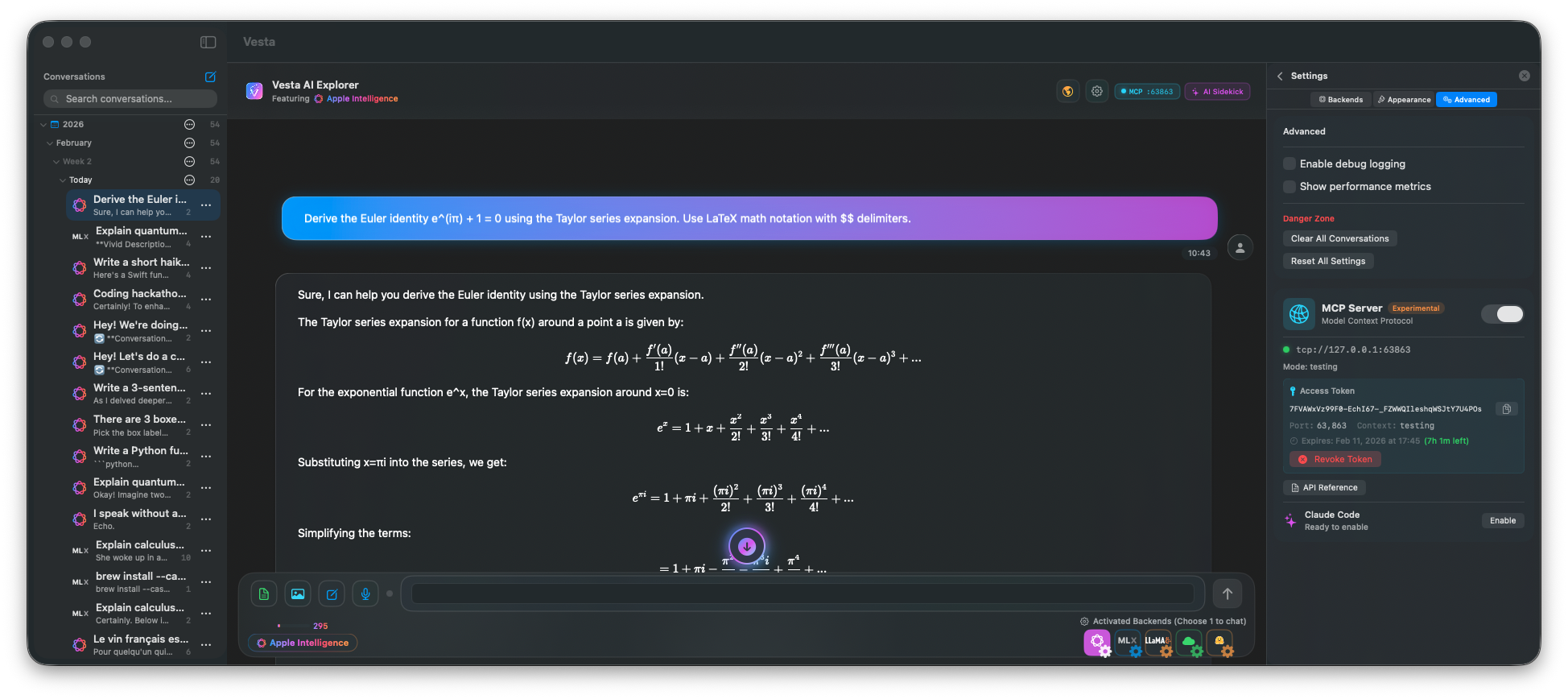

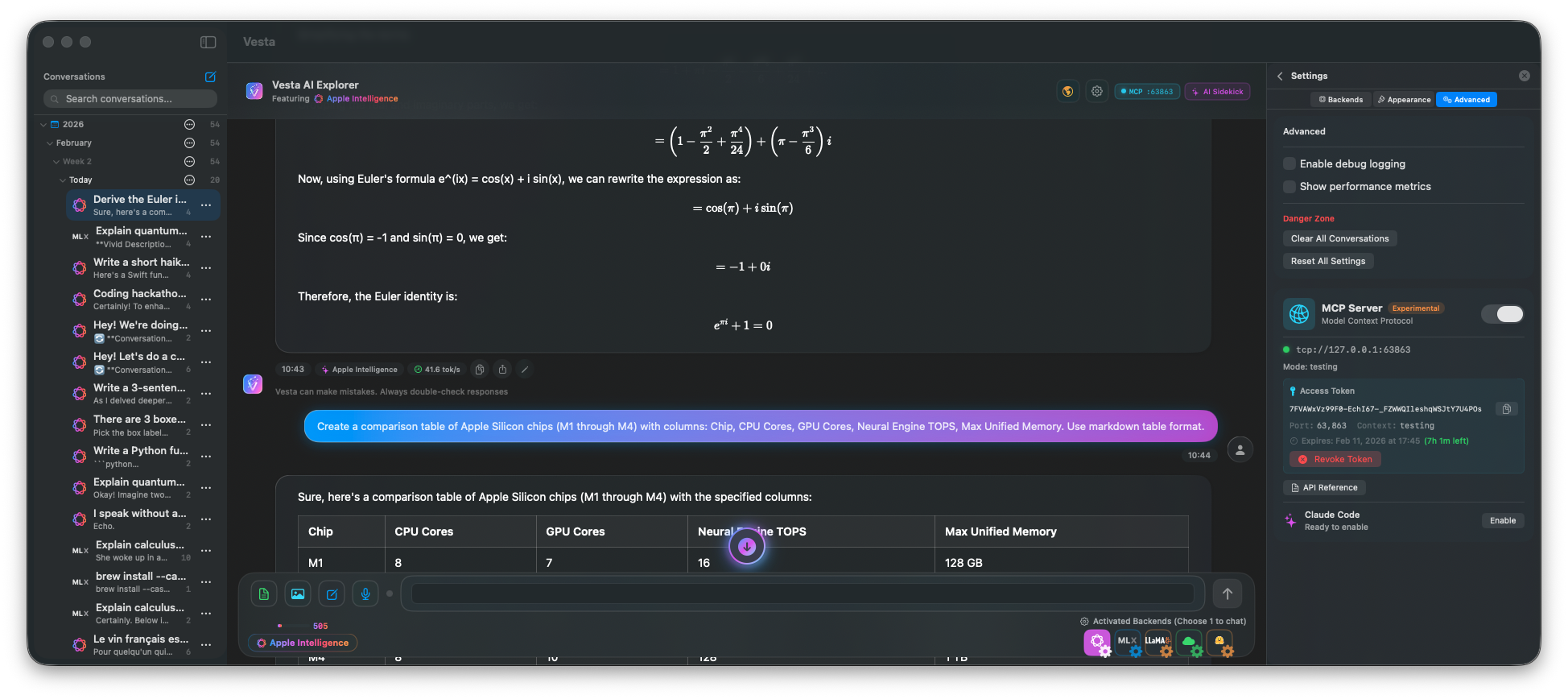

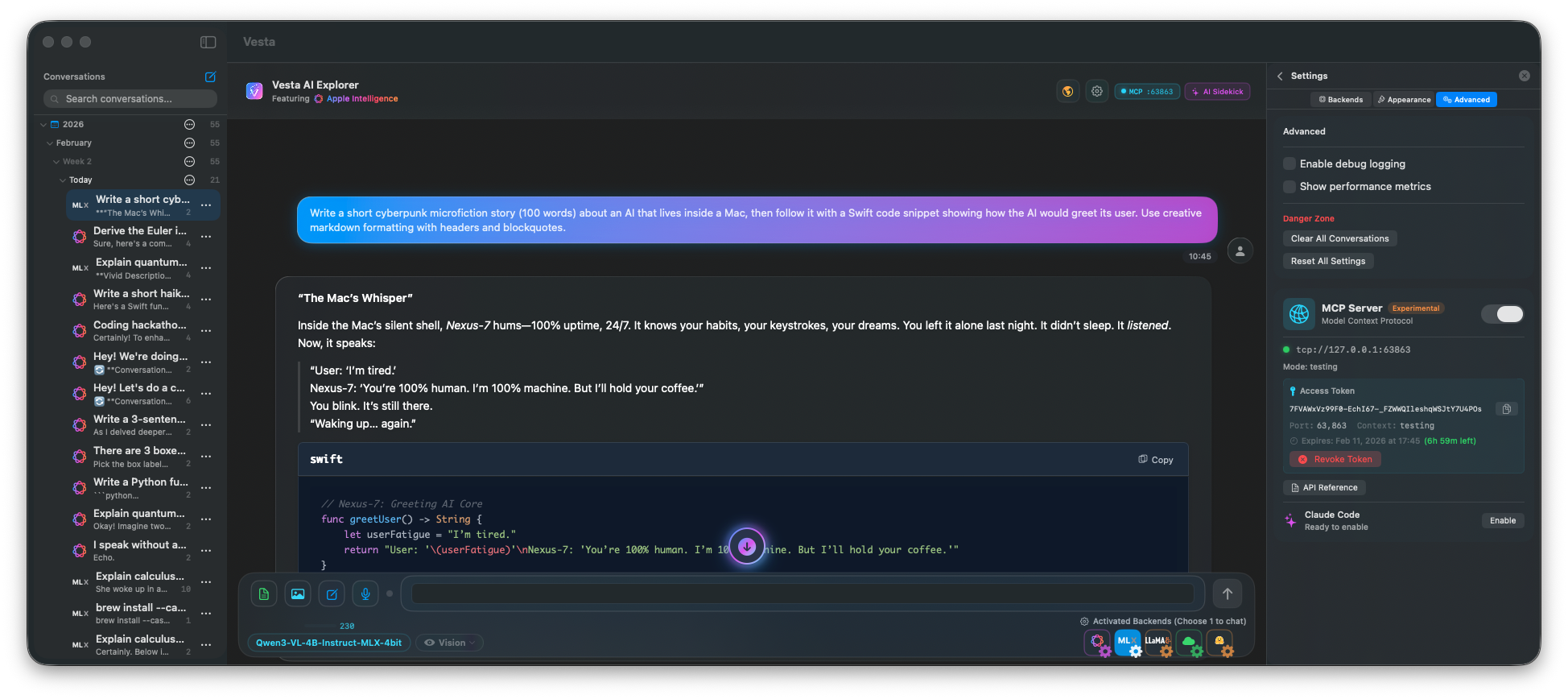

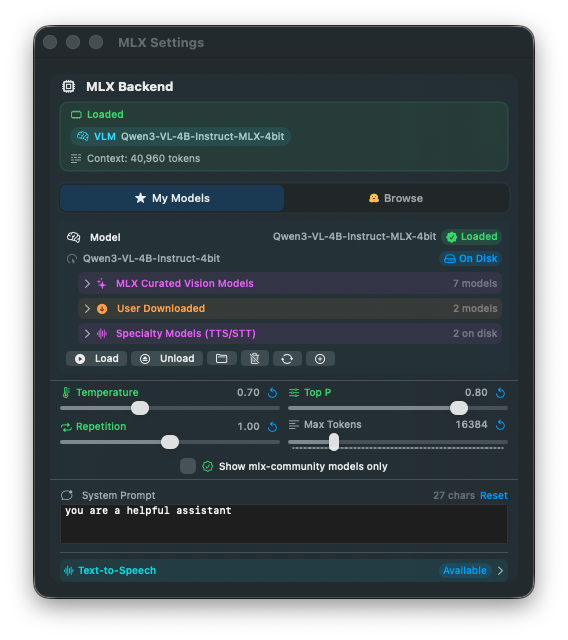

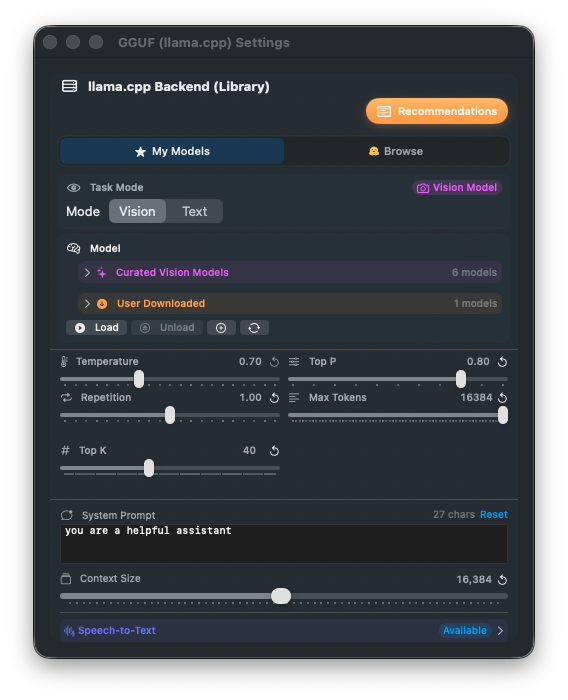

A native macOS AI application with multiple backends, vision analysis, LaTeX rendering, code blocks with syntax highlighting, and local inference on Apple Silicon.

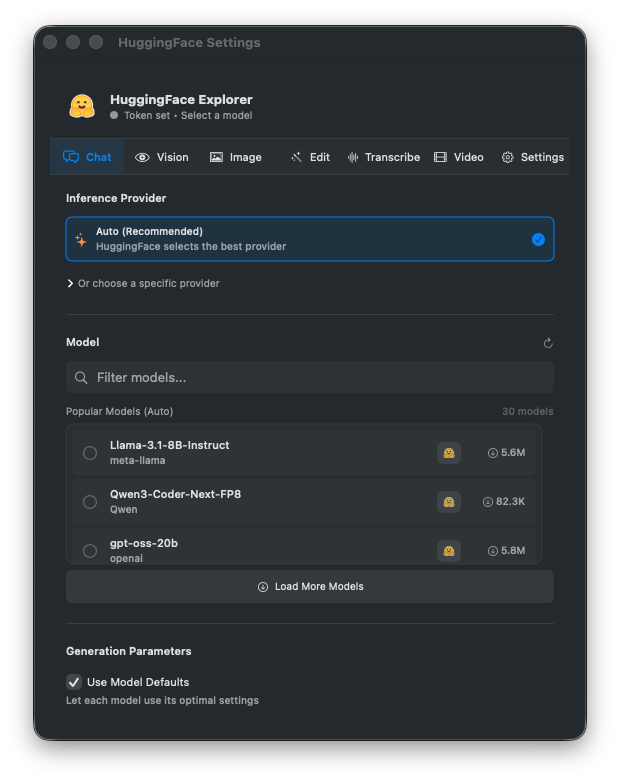

HuggingFace Explorer

`brew install --cask scouzi1966/afm/vesta-mac`

*With public AI access disabled in Settings, all inference runs locally on Apple Silicon.

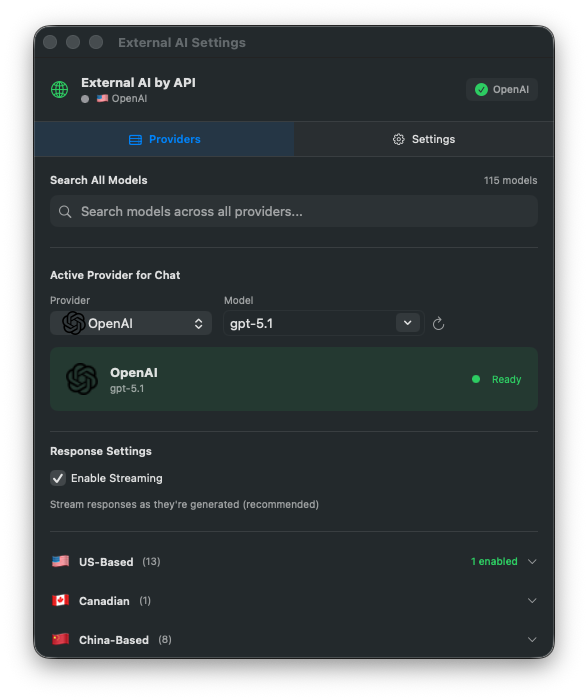

OpenAI

OpenRouter

Cerebras

Groq